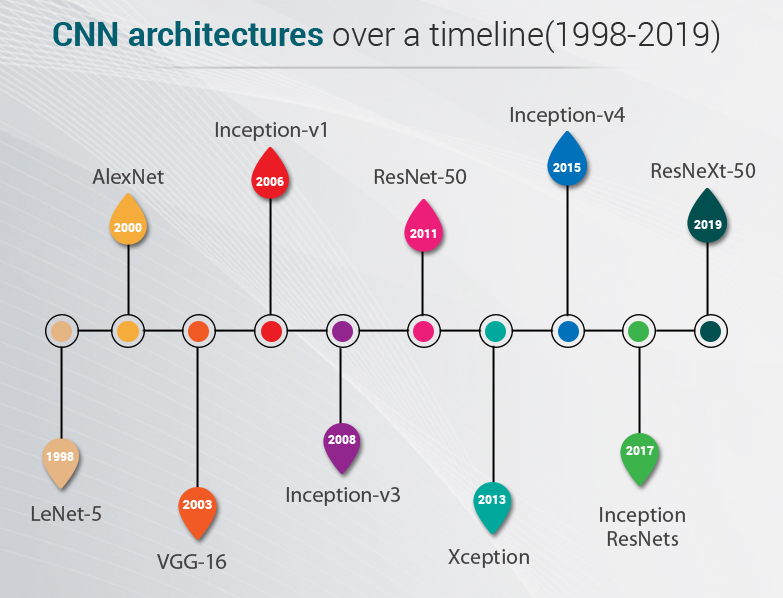

CNN Architectures Over a Timeline (1998-2019)

Convolutional neural networks (CNNs) are among the most popular neural network architectures, widely used in deep learning models for computer vision and image recognition.

Over the years, CNNs have undergone considerable rework and advancement, leaving us with a plethora of CNN models. Let’s discuss the most important of these variants.

LeNet-5

Architecture: LeNet-5 has 2 convolutional and 3 fully connected layers. The convolutional layers are interleaved with sub-sampling layers (now known as pooling layers). LeNet-5 has about 60,000 parameters.

Year of Release: 1998

About: Developed by Yann LeCun and colleagues, who applied backpropagation-style learning to Fukushima’s convolutional neural network architecture.

USP: LeNet-5 can be considered the standard template for modern CNNs: stack convolutional and pooling layers, then terminate the model with one or more fully connected layers.
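The conv, pool, dense pattern can be made concrete by counting parameters. The sketch below assumes the simplified LeNet-5 found in many modern re-implementations (32x32 grayscale input, 5x5 kernels, fully connected second convolution, parameter-free pooling, 10 output classes), so its total differs slightly from the original 1998 network. All function names are illustrative.

```python
def conv_params(in_channels, out_channels, kernel):
    """Weights plus one bias per output channel for a square kernel."""
    return in_channels * out_channels * kernel * kernel + out_channels


def dense_params(in_features, out_features):
    """Weights plus one bias per output unit."""
    return in_features * out_features + out_features


def output_size(size, kernel, stride=1):
    """Spatial size after a convolution or pooling step without padding."""
    return (size - kernel) // stride + 1


# Trace the feature-map size through the assumed conv/pool stack.
size = 32
size = output_size(size, 5)      # first convolution: 32 -> 28
size = output_size(size, 2, 2)   # pooling: 28 -> 14
size = output_size(size, 5)      # second convolution: 14 -> 10
size = output_size(size, 2, 2)   # pooling: 10 -> 5
flattened = 16 * size * size     # 16 feature maps of 5x5 = 400 values

lenet5_total = (
    conv_params(1, 6, 5)
    + conv_params(6, 16, 5)
    + dense_params(flattened, 120)
    + dense_params(120, 84)
    + dense_params(84, 10)
)
print(lenet5_total)  # 61706
```

Under these assumptions the count lands close to the "about 60,000" figure quoted above, and most of the weights sit in the first fully connected layer.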

AlexNet

Architecture: AlexNet has 8 layers: 5 convolutional and 3 fully connected. It has about 60 million parameters.

Year of Release: 2012

About: At the time of publication, the authors of AlexNet believed it was one of the largest convolutional neural networks trained to date on the subsets of ImageNet.

USP: AlexNet’s developers successfully used Rectified Linear Units (ReLUs) as activation functions, along with overlapping pooling.
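To illustrate the two ideas, here is a minimal sketch of ReLU and of overlapping versus non-overlapping max pooling. It works on a 1D list for brevity (AlexNet pools over 2D feature maps), and the function names and sample values are made up for illustration. Pooling overlaps whenever the stride is smaller than the window, as with a window of 3 and a stride of 2.

```python
def relu(values):
    """Rectified Linear Unit: negative inputs become zero."""
    return [max(0.0, v) for v in values]


def max_pool_1d(values, window, stride):
    """Max over each window; windows overlap when stride < window."""
    return [
        max(values[i:i + window])
        for i in range(0, len(values) - window + 1, stride)
    ]


signal = [-1.0, 2.0, -3.0, 4.0, 0.5, -0.5, 1.5]
activated = relu(signal)
print(activated)        # [0.0, 2.0, 0.0, 4.0, 0.5, 0.0, 1.5]

overlapping = max_pool_1d(activated, window=3, stride=2)
non_overlapping = max_pool_1d(activated, window=2, stride=2)
print(overlapping)      # [2.0, 4.0, 1.5]
print(non_overlapping)  # [2.0, 4.0, 0.5]
```

Both variants produce three outputs here, but each overlapping window sees one extra neighbouring value.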

VGG-16

Architecture: VGG-16 has 13 convolutional and 3 fully-connected layers. It used ReLUs as activation functions, just like in AlexNet. VGG-16 had 138 million parameters. A deeper version, VGG-19, was also constructed along with VGG-16.

Year of Release: 2014

About: Believing that the best way to improve the accuracy of a CNN was to stack more layers onto it, researchers at the Visual Geometry Group (VGG) at the University of Oxford developed VGG-16 and VGG-19.

USP: Among the first very deep CNNs, built from uniform stacks of small 3x3 convolutions.
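The 138 million figure can be reproduced with a short calculation. This is a sketch that assumes the usual VGG-16 configuration: 3x3 convolutions with biases, five 2x2 max-pooling steps, a 224x224 RGB input, and 1000 output classes. The configuration list and function name are illustrative.

```python
# Numbers are output channels of 3x3 convolutions; "M" marks a 2x2 max pool.
VGG16_CONFIG = [
    64, 64, "M",
    128, 128, "M",
    256, 256, 256, "M",
    512, 512, 512, "M",
    512, 512, 512, "M",
]


def vgg16_param_count(config=VGG16_CONFIG, in_channels=3,
                      image_size=224, num_classes=1000):
    total = 0
    channels, size = in_channels, image_size
    for item in config:
        if item == "M":
            size //= 2  # pooling halves the spatial size and adds no weights
        else:
            total += channels * item * 3 * 3 + item  # weights + biases
            channels = item
    features = channels * size * size  # flattened input to the first dense layer
    for out_features in (4096, 4096, num_classes):
        total += features * out_features + out_features
        features = out_features
    return total


print(vgg16_param_count())  # 138357544
```

Roughly 124 million of these parameters belong to the three fully connected layers, while the 13 convolutional layers account for under 15 million.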

Inception-v1

Architecture: Inception-v1 (also known as GoogLeNet) made heavy use of the Network in Network approach and had 22 layers with about 5 million parameters.

Year of Release: 2014

About: This network was the result of a study on approximating sparse architectures with dense components. Its strongest feature was the improved use of computing resources inside the network.

USP: Instead of stacking convolutional layers atop each other, this network stacked dense modules which had convolutional layers within them.
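One reason such modules stay cheap is the Network in Network trick of placing a 1x1 convolution before an expensive larger one. The sketch below compares weight counts for a 5x5 branch with and without that reduction. The channel counts (192 in, 16 after reduction, 32 out) are illustrative values chosen to resemble an early Inception module, and biases are ignored.

```python
def conv_weights(in_channels, out_channels, kernel):
    """Weight count of a square convolution, ignoring biases."""
    return in_channels * out_channels * kernel * kernel


in_channels, reduced_channels, out_channels = 192, 16, 32

# A 5x5 convolution applied directly to all input channels.
direct = conv_weights(in_channels, out_channels, 5)

# A 1x1 convolution shrinks the channel count before the 5x5 convolution.
with_reduction = (
    conv_weights(in_channels, reduced_channels, 1)
    + conv_weights(reduced_channels, out_channels, 5)
)

print(direct)          # 153600
print(with_reduction)  # 15872
```

With these numbers the reduced branch needs roughly a tenth of the weights of the direct one.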

Inception-v3

Architecture: A successor to Inception-v1, Inception-v3 had 24 million parameters and ran 48 layers deep.

Year of Release: 2015

About: Inception-v3 could classify images into 1000 categories, including keyboard, pencil, mouse, and many animals. The model was trained on more than one million images from the ImageNet database.

USP: Inception-v3 was among the first networks to use batch normalization. It also factorized larger convolutions into smaller ones to make computation more efficient.
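The saving from factorization is simple arithmetic. The sketch below counts weights per input/output channel pair for two common factorizations: a 5x5 kernel replaced by two stacked 3x3 kernels, and a 7x7 kernel replaced by a 1x7 followed by a 7x1. It assumes the channel count stays the same between the stacked layers; the helper name is illustrative.

```python
def kernel_weights(height, width):
    """Weights in one kernel slice (one input channel, one output channel)."""
    return height * width


# Two stacked 3x3 convolutions cover the same 5x5 receptive field.
five_by_five = kernel_weights(5, 5)
two_three_by_three = 2 * kernel_weights(3, 3)

# A 1x7 followed by a 7x1 convolution covers a 7x7 receptive field.
seven_by_seven = kernel_weights(7, 7)
asymmetric_pair = kernel_weights(1, 7) + kernel_weights(7, 1)

print(five_by_five, two_three_by_three)  # 25 18
print(seven_by_seven, asymmetric_pair)   # 49 14
```

The first factorization saves 28% of the weights, and the asymmetric one saves considerably more as the kernel grows.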

ResNet-50

Architecture: ResNet-50 is 50 layers deep and is built from residual blocks (three convolutional layers per block in ResNet-50). It has about 26 million parameters.

Year of Release: 2015

About: The basic building blocks of ResNet-50 are convolutional and identity blocks. To address the degradation in accuracy seen as networks get deeper, Microsoft researchers added skip connections.

USP: ResNet-50 popularized skip connections and gave developers a way to build even deeper CNNs without compromising accuracy. ResNet-50 was also among the first CNNs to use batch normalization.
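A skip connection adds the block's input back to its output, so the block computes relu(F(x) + x) instead of relu(F(x)). The sketch below is a deliberately simplified illustration: it uses small dense layers instead of convolutions, omits batch normalization, and all names are hypothetical. It shows that with all-zero weights a residual block still passes its input through, while a plain block loses it.

```python
def linear(weights, vector):
    """Matrix-vector product with weights given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    return [max(0.0, v) for v in vector]


def plain_block(x, w1, w2):
    """Two stacked layers without a skip connection."""
    return relu(linear(w2, relu(linear(w1, x))))


def residual_block(x, w1, w2):
    """Same layers, but the input is added back before the final ReLU."""
    fx = linear(w2, relu(linear(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 3.0]
```

Because the identity mapping is available by default, extra residual blocks only need to learn a correction to their input, which is one intuition for why very deep residual networks remain trainable.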

Xception

Architecture: Xception was 71 layers deep and had 23 million parameters. It was based on Inception-v3.

Year of Release: 2016

About: Xception was heavily inspired by Inception-v3, but it replaced the Inception modules with depthwise separable convolutions.

USP: Xception is essentially a CNN built almost entirely from depthwise separable convolutional layers.
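A depthwise separable convolution splits a standard convolution into a per-channel spatial filter (depthwise) followed by a 1x1 convolution that mixes channels (pointwise). The sketch below compares weight counts for the two; the layer sizes (128 input channels, 256 output channels, 3x3 kernel) are arbitrary illustrative values, and biases are ignored.

```python
def standard_conv_weights(in_channels, out_channels, kernel):
    """Every output channel filters every input channel spatially."""
    return kernel * kernel * in_channels * out_channels


def separable_conv_weights(in_channels, out_channels, kernel):
    """One spatial filter per input channel, then a 1x1 channel mixer."""
    depthwise = kernel * kernel * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


standard = standard_conv_weights(128, 256, 3)
separable = separable_conv_weights(128, 256, 3)

print(standard)   # 294912
print(separable)  # 33920
```

For this layer the separable form uses under 12% of the weights of the standard convolution.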

Inception-v4

Architecture: With 43 million parameters and an upgraded Stem module, Inception-v4 is a deeper, pure Inception network. The dramatically improved training speed reported alongside it comes from adding residual connections, as in the Inception-ResNet variants.

Year of Release: 2016

About: Developed by Google researchers, Inception-v4 made uniform choices for the Inception blocks at each grid size.

USP: A deeper network, Stem improvements, and the same number of filters in every convolution block.

Inception-ResNets

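Architecture: Inception-ResNet-v2, the larger of the two Inception-ResNet variants, has roughly 56 million parameters. Its Inception modules are wrapped in residual connections.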

Year of Release: 2017

About: It combined the Inception architecture with ResNet-style residual connections.

USP: Converted Inception modules into residual Inception blocks, which sped up training.

ResNeXt-50

Architecture: At 50 layers deep and sporting 25.5 million parameters, ResNeXt-50 was trained on more than a million images from the ImageNet dataset.

Year of Release: 2017

About: An improvement over ResNet, the ResNeXt family reported a 3.03% top-5 error rate on ImageNet, a considerable relative improvement of 15%.

USP: Scaled up the cardinality dimension, that is, the number of parallel transformation paths within a block.
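Cardinality can be raised without inflating the model. The sketch below compares a ResNet-style bottleneck block (256 channels reduced to a width of 64) with a ResNeXt-style block that uses 32 parallel paths of width 4, implemented as a grouped 3x3 convolution. The function name is illustrative, and biases and batch normalization are ignored.

```python
def bottleneck_weights(channels, width, cardinality=1):
    """Weights of a 1x1 -> grouped 3x3 -> 1x1 bottleneck block."""
    group_width = width // cardinality
    reduce = channels * width                                # 1x1 reduction
    grouped = cardinality * 3 * 3 * group_width * group_width  # 3x3 per group
    expand = width * channels                                # 1x1 expansion
    return reduce + grouped + expand


resnet_block = bottleneck_weights(channels=256, width=64, cardinality=1)
resnext_block = bottleneck_weights(channels=256, width=128, cardinality=32)

print(resnet_block)   # 69632
print(resnext_block)  # 70144
```

Both blocks need about 70,000 weights, so the 32 parallel paths come at nearly the same parameter budget as the single-path block.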

Takeaway

CNNs have improved significantly over time, mostly due to improved computing power, new ideas, experiments, and the sheer interest of the deep learning community across the globe. However, there is a lot more to know about these versatile networks. Stay tuned for more on CNNs!
